
Emanuele Ratti (University of Bristol, UK) and Avigail Ferdman (Technion, Israel Institute of Technology, Israel)

A major challenge in embedding ethics is to make a real difference to the agency of students and practitioners. This is especially challenging when ethical principles are very abstract: students might feel they lack the skills to operationalize abstract principles in concrete and complex cases, while also feeling disenfranchised because they lack the political power to influence a situation. For example, as Wessel Reijers notes, in a corporate mentality of “move fast and break things”, an employee might be aware of their ethical responsibility to do the right thing, but the political structures of the organization might wholly obstruct their ability to act on it. As a result, students might feel powerless.

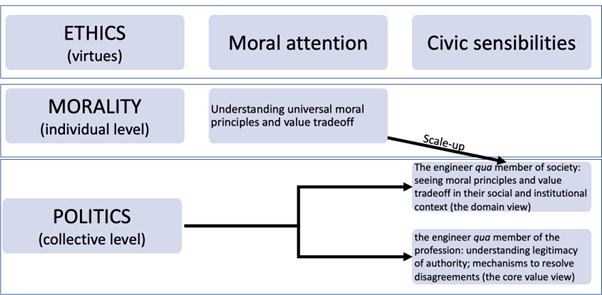

Fostering agency is therefore crucial: students should see themselves as being able to act. We think that there are two steps in fostering agency. The first is to understand that a certain technical situation requires moral consideration. The second is to scale up those considerations to a societal level, in order to identify what needs to change at that higher level. In this way, practitioners will be able to identify moral issues, and they will habituate themselves to imagining those issues at a different scale. Embedded ethics should therefore have as pedagogical goals the cultivation of two skills, or proto-virtues: moral attention and civic sensibility.

From understanding to agency: linking moral attention to civic sensibilities

In Ferdman & Ratti (2024), we conceptualize moral attention as the ability to recognize and understand morally relevant situations and value tradeoffs, albeit in abstract contexts. For example, in an embedded ethics module on autonomous vehicles, the aim would be to expose students to trolley-like problems and foster a deeper appreciation of the different (potentially incompatible) moral conceptions that are inevitably built into the behavior of the AI, e.g. utilitarianism vs. deontology. More specifically, the module would help students detect value conflicts such as accuracy vs. efficiency, manifest for example in the conflict between achieving representational accuracy and reducing overall accidents.

Civic sensibilities are skills or proto-virtues necessary for the engineer or computer scientist to act competently and morally as a citizen or member of a political community. One manifestation of civic sensibility is the disposition to uphold the just basic structure of society through toleration, respect for rights, respect for others’ autonomy and for institutions that administer justice. Another manifestation of civic sensibility is inherent in the engineer’s role as a member of their professional community. Here the focus is on the professional context: for example, voicing concerns to one’s employer about technologies aimed at intrusive or predatory surveillance, or platforms that control the flow and dissemination of information in undemocratic ways; not working for ‘bad actors’; training machine learning systems with attention to structural inequalities (Ferdman and Ratti 2024); taking collective steps to counteract the harmful effects of the ‘move fast and break things’ mentality in big tech; addressing the ‘responsibility gap’ that exists with respect to emerging technologies like AI, where responsibility lies in the hands of the collective, but no individual can be held morally responsible (van de Poel, Royakkers, and Zwart 2015).

Linking moral attention to civic sensibilities

While the role of the moral dimension is to cast the normative lens on the question “what would you do?”, the ‘civic sensibilities’ dimension casts the normative lens on a different question: “what needs to change?” (Conlon 2022). The idea is that moral attention will sharpen students’ investigative capacities about moral problems, while consistently viewing those problems at a larger scale will sharpen their civic sensibilities. We think that learning how to visualize moral issues at a different scale will foster a sense of agency that compartmentalized modules will not.